Chandra Data System Software — A Retrospective Look

Janet Evans for the CXCDS Software team (Past and Present)

A System Overview

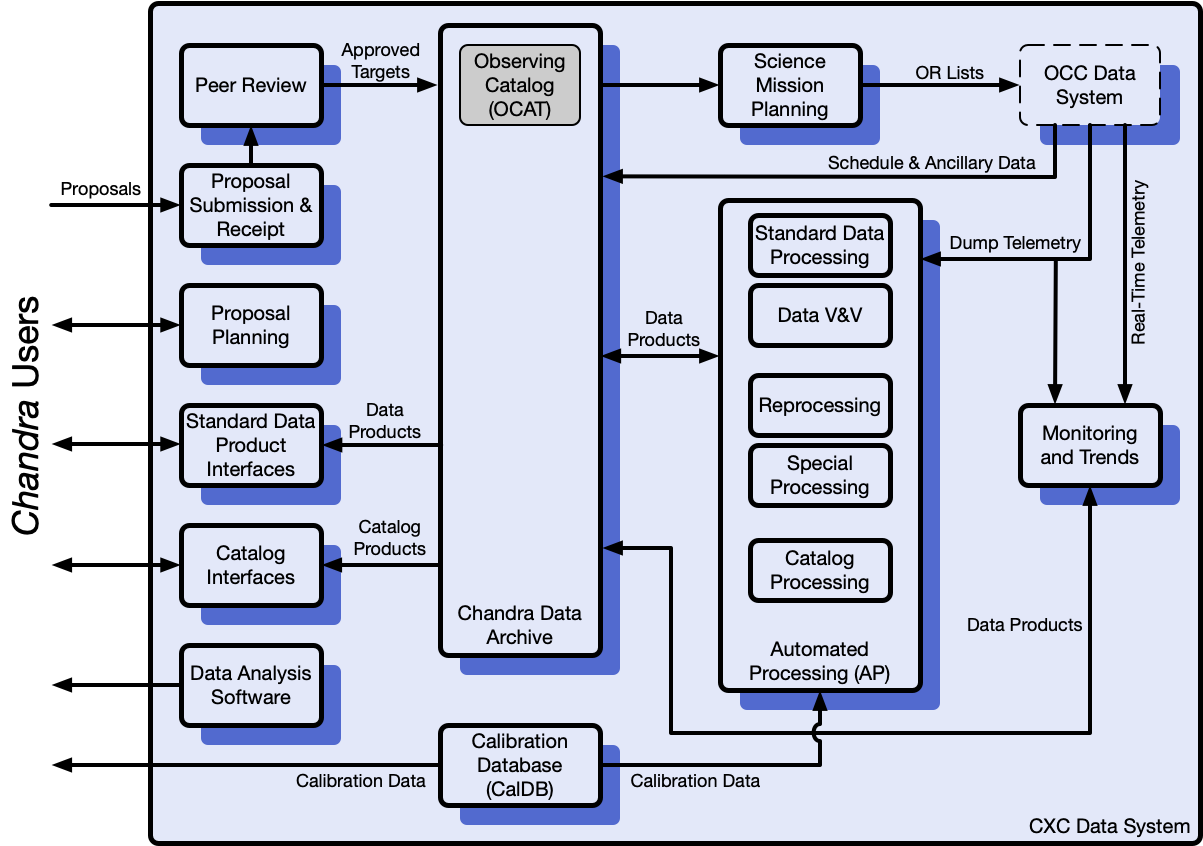

The Chandra X-ray Center Data System (CXCDS) software provides end-to-end software support for Chandra mission operations (Fig. 1). The team developed, maintains, and supports the software that drives most of the CXC-based forward (proposer to spacecraft) and return (spacecraft to observer) threads necessary to perform the Chandra observing program (for further details, see Evans et al. 2008).

Figure 1: Schematic diagram of the CXC data system components and architecture. The CXCDS automated processing facility is co-located with Flight Operations at the OCC. The Chandra data archive is hosted at the same location and mirrored off site. Proposal submission and receipt, science mission planning, and science user support are located at the SAO Garden Street location.

The forward thread begins with proposal submission and receipt software that manages development, validation, and submission of user science proposals and their receipt by the CXC. Additional software applications support the organization of peer review panels, assignment of reviewers to panels, conflict checking of proposed observations, and management of peer review statistics and reviews. Approved targets are promoted to the observing catalog (OCAT) database in preparation for science mission planning. Mission planning software extracts observations from the OCAT and supports assignment of these observations to weekly schedules in a way that satisfies the scheduling constraints stated in the proposals. The resulting observation request (OR) list is submitted to the Chandra Operations Control Center (OCC) for detailed scheduling and command generation. The OCC returns the detailed observing plan, which is compared to the request to validate the schedule.

Receipt of dump telemetry data from the OCC begins the return thread. Standard data processing (SDP) pipelines perform standard reductions to remove spacecraft and instrumental signatures and to produce calibrated data products suitable for science-specific analysis by the end user. Each pipeline includes ~5–30 separate programs, or tools, and several dozen pipelines comprise the SDP thread. Processing is managed by an automated processing (AP) system that monitors data, instantiates pipelines, monitors pipeline status, and alerts operations staff when anomalies occur. Information extracted from the OCAT is used to manage observation processing and to verify that the observation was obtained in accordance with the observer’s specifications. At this stage, any data can be reprocessed as needed (e.g., because of prior errors or improved calibrations), and custom manual workarounds can be applied to any observation that requires special handling.
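
As a rough illustration of the monitor, instantiate, and alert cycle described above, the following minimal Python sketch runs a chain of pipelines and records status and alerts. It is not the CXCDS implementation: the class names (`Pipeline`, `AutomatedProcessor`), the status values, and the choice to stop at the first failed pipeline are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    DONE = "done"
    FAILED = "failed"


@dataclass
class Pipeline:
    """A named sequence of tools; each tool maps a data-product dict to a new one."""
    name: str
    tools: List[Callable[[dict], dict]]


@dataclass
class AutomatedProcessor:
    """Hypothetical stand-in for an AP system: run pipelines, track status, collect alerts."""
    pipelines: List[Pipeline]
    status: Dict[str, Status] = field(default_factory=dict)
    alerts: List[str] = field(default_factory=list)

    def process(self, obs_id: str, data: dict) -> dict:
        for pipeline in self.pipelines:
            key = f"{obs_id}/{pipeline.name}"
            try:
                for tool in pipeline.tools:
                    data = tool(data)
                self.status[key] = Status.DONE
            except Exception as exc:
                # Record the anomaly for operations staff and stop, on the
                # assumption that later pipelines need this one's products.
                self.status[key] = Status.FAILED
                self.alerts.append(f"{key}: {exc}")
                break
        return data
```

Because the status table is keyed by observation and pipeline, reprocessing in this sketch amounts to calling `process` again for the same observation with corrected inputs or updated tools.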

Monitoring and trends software performs limit monitoring of both dump and real-time telemetry for health-and-safety and triggers real-time alerts of anomalies as configured by the CXC Science Operations Team. Databases support long-term trends analysis to predict future spacecraft and instrument function for forward planning.
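
Limit monitoring of this kind can be sketched in a few lines of Python. The example below is only an illustration under stated assumptions: the `Limit` type, the `check_limits` function, and the telemetry mnemonics used with it are hypothetical and are not taken from the CXC monitoring software.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Limit:
    """Allowed range for one telemetry channel (inclusive on both ends)."""
    low: float
    high: float


def check_limits(
    samples: Dict[str, float], limits: Dict[str, Limit]
) -> List[Tuple[str, float, str]]:
    """Return (channel, value, 'below' | 'above') for every out-of-range sample."""
    violations = []
    for name, value in samples.items():
        limit = limits.get(name)
        if limit is None:
            continue  # no limit configured for this channel
        if value < limit.low:
            violations.append((name, value, "below"))
        elif value > limit.high:
            violations.append((name, value, "above"))
    return violations
```

Keeping the limits in a plain table, separate from the checking code, reflects the idea that the alert thresholds are configured by the operations team rather than hard-coded.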

The Chandra Data Archive (CDA) securely stores all telemetry and data products created by SDP. Archive software restricts access to proprietary data, performs data distribution to observers, and manages public release of data once the proprietary period expires. Several interfaces to the CDA are supported to meet the needs of the various user communities for access to Chandra data. Chief among these interfaces to the CDA is ChaSeR, a flexible and powerful search and retrieval tool that meets the needs of professional users and incorporates authorized access to proprietary data. Similarly, CSCview is the premier interface to the Chandra Source Catalog (CSC), although CSC data can also be queried using a number of interfaces defined by and compliant with the International Virtual Observatory Alliance (IVOA).
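
The access rule described above (proprietary data are restricted until the proprietary period expires, then become public) can be expressed as a small check. This Python sketch is illustrative only: the `ArchivedObservation` record, its field names, and the simplification that only the PI is authorized before release are assumptions, not the CDA's actual data model or policy logic.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ArchivedObservation:
    """Hypothetical archive record with the fields needed for an access decision."""
    obs_id: str
    pi: str
    public_release: date


def may_retrieve(obs: ArchivedObservation, user: Optional[str], today: date) -> bool:
    """Anyone may retrieve public data; before release, only the PI may."""
    if today >= obs.public_release:
        return True
    return user is not None and user == obs.pi
```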

The Calibration Database (CalDB) for Chandra is a HEASARC-compliant, software-accessible data structure that is used in both SDP and the Chandra Interactive Analysis of Observations (CIAO) data analysis software package.

The CIAO software package is the distributable data analysis system for Chandra, and all users of the observatory (internal, guest, and archival) utilize the package to perform further calibrations and science-specific analysis, as well as to extract publishable information from the pipeline data products. CIAO consists of an extensive set of analysis tools, including Sherpa—an integrated application for spectral and spatial modeling and fitting—and SAOImage DS9 for visualization. Some tools perform Chandra instrument-specific calibration and analysis functions, while others are designed to be compatible with a variety of missions while taking advantage of special knowledge of Chandra internals where appropriate.

Software design and development

Software development for the CXCDS began in the mid-1990s and continues to support the mission today. The system consists of ~2 million logical lines of code, including C/C++, Python, SQL, Java, Perl, and a few stray algorithms written in Fortran.

Early development of the system followed a formal systems engineering approach. CXC scientists and software engineers were partnered with an external contractor, TRW (now Northrop Grumman). Together, the CXC and TRW developed system and science requirements that were the basis of the design and implementation of an operational system that met the needs of the project and that was completed by launch.

The system delivered at launch was written for “normal,” “steady” operations and assumed an integrated (all systems) telemetry stream as input. This was not the reality of Orbital Activation and Checkout, where one component is turned on at a time—so we had to improvise. To this day I tell folks that we made it, but also that we had to support 90 releases of the data system in 90 days to add flexibility and enhancements to meet the realities of the live data. A good software patch and deploy system is critical from the start. It took us a few months to meet the other major requirement, which was to process and deliver new observations in one week. It seemed like a huge requirement at the time, and we worked to estimate what was needed for hardware and what could be managed by software runtime to meet the deadline. After several months we had reached that goal. With the increased capabilities of hardware over the years and a robust data system, most Chandra observations are currently processed and delivered to the PI by operations within one day.

The design of the data system architecture was planned in detail to meet the needs of a project that was fairly complex and had many variables. The characteristics that guided the design were modularity, flexibility, compatibility, extensibility, and reuse of off-the-shelf software whenever possible.

The resulting CXC software system is highly modular and follows a layered architecture that consists of layers of software components and modules separated by standardized application programming interfaces (APIs). Each layer hides the details of its internal structure and mechanisms from the other layers. The CDA was fully integrated into the system from the start and fully supported all of the operations functions.
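
The value of layers separated by standardized APIs can be shown with a small Python sketch. Everything named here is hypothetical: `EventStore`, `InMemoryStore`, and `count_in_band` are invented for the example and do not correspond to CXCDS libraries. The point is only that the analysis function depends on the interface and never on how the data are stored.

```python
from typing import Dict, List, Protocol


class EventStore(Protocol):
    """Interface exposed by a lower (I/O) layer; upper layers see only this."""

    def read_energies(self, obs_id: str) -> List[float]: ...


class InMemoryStore:
    """One possible implementation; a file- or database-backed store could replace it."""

    def __init__(self, tables: Dict[str, List[float]]) -> None:
        self._tables = tables  # internal detail hidden from callers

    def read_energies(self, obs_id: str) -> List[float]:
        return list(self._tables[obs_id])


def count_in_band(store: EventStore, obs_id: str, lo: float, hi: float) -> int:
    """Upper-layer tool: count events with lo <= energy < hi via the interface only."""
    return sum(lo <= energy < hi for energy in store.read_energies(obs_id))
```

Swapping the storage backend in such a design leaves `count_in_band` untouched, which is the same property that makes platform migrations tractable in a layered system.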

We also worked up front to address maintainability, performance, and, for some parts of the system, portability. So now with twenty-five years behind us, it’s an interesting exercise to reflect on the maintenance and major migrations the system has undergone over the years.

25 Years in Operations

Originally developed on Solaris, the data system was migrated to Linux in 2002. A second migration followed in 2004, from a 32-bit to a 64-bit system, to match current hardware. The structured design and layered approach built into the system provided a solid foundation for these migrations. Migration to Python was also a fundamental upgrade to the system. Python wrapper scripts were built on C libraries to expose them to scripting interfaces (especially I/O libraries), and the science and software teams began writing many tools in Python that are used for operations, many of which are released in CIAO (as discussed in Issue 34). Currently underway, with completion scheduled for this fall, is a migration of the code base from the original ClearCase configuration and release management system to GitLab. We are also modernizing the software build infrastructure and testing framework, which will bring the system more in sync with other astronomical software systems in use today.

The Chandra data system has features that parallel more modern software trends, though implemented in a somewhat dated fashion reflecting the technology available in the mid to late ’90s. For example, software “sharing” is a current topic in software circles, whereby two or more users or projects interactively work on the same library or application, made available through Git for managing versions in the cloud. CXCDS/SW has engaged in software sharing through the use of Off-the-Shelf (OTS) software. We implemented software sharing using commercial OTS (e.g., Sybase for the Archive, ClearCase for configuration and release management), free OTS (e.g., HEASARC/cfitsio library for data file I/O), and adapted OTS (AOTS) that were imported and modified for Chandra needs (e.g., STScI Hubble pipeline processing system, GSFC proposal system). In all, ~80 OTS packages are managed in the CXCDS system, reflecting a NASA requirement to use OTS whenever possible to save on software development and cost.

Our Chandra data analysis package CIAO was developed to be downloaded and configured on the user’s home computer. This seemed a big challenge in the early 2000s, but as systems have become more compatible over time, the challenge has become easier. In the early days, a scripted system managed the packaging and deployment of CIAO to various Linux platforms; more recently, we have migrated to the more standard Conda packaging system.

The overall data system diagram has stayed mainly constant over the years. We have maintained the system and the content of each operational component to stay current with spacecraft changes, telemetry changes, proposal needs, data processing, monitoring and trends, archiving data products, user needs in CIAO and Sherpa, and the recent release of the Chandra Source Catalog 2.1.

Looking back on the past twenty-five years, the CXCDS system has served Chandra science operations well; the up-front planning and design have paid off. The team has managed operational changes, OS upgrades, compiler and scripting language transitions, and many other changes over time that would not have been possible without the forward thinking and structured architecture designed in from the beginning. We continue to meet the needs of the Observatory and are doing our part to contribute to the astrophysics discoveries of Chandra.